What You Need to Know About Audio Time Management for Radio | Telos Alliance

By The Telos Alliance Team on Mar 26, 2014 5:21:00 PM

The first audio delays were tube-based.

But did you know that those tubes were garden hoses with a speaker at one end and a microphone at the other? The hose’s length was calculated at one foot per millisecond of desired delay. As you can imagine, it did not sound good at all.
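Where does one foot per millisecond come from? Sound in air travels roughly 1,125 feet per second at room temperature (about 343 m/s at 20 °C), so each millisecond of delay needs a little over a foot of hose. A quick sketch, assuming that speed of sound:

```python
# Rough hose length for an acoustic delay line: the sound has to travel the hose.
# Assumption: speed of sound in air near 20 °C is about 343 m/s (~1,125 ft/s).
SPEED_OF_SOUND_FT_PER_S = 1125.0

def hose_length_ft(delay_ms: float) -> float:
    """Return the hose length (feet) needed for delay_ms of acoustic delay."""
    return SPEED_OF_SOUND_FT_PER_S * delay_ms / 1000.0

for ms in (1, 10, 100):
    print(f"{ms:>4} ms of delay -> {hose_length_ft(ms):7.1f} ft of hose")
```

By the same arithmetic, a full second of delay would need more than 1,100 feet of hose.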

Today, radio broadcasters can take advantage of sophisticated digital technologies for audio delay, as well as for shrinking and stretching program material.

In this post, we’ll take a look at some of the historical highlights and where persistence and innovation have gotten us today.

Tape delays for radio call-in

Early FCC rules prohibited placing phone callers on the air live. Several online sources credit Frank Cordaro of WKAP, Allentown, PA, with inventing the tape delay.

A tape machine was modified to increase the tape path between the record and playback heads, so stations could claim the call was recorded and bypass the FCC requirement. As call-in shows became more widespread, tape delays built on reel-to-reel or endless-loop cartridge machines came into common use. Station engineers would “MacGyver” a system in which operators could hit a DUMP button to mute the tape machine and play prerecorded filler, then switch back to the live program once the crisis had passed.

Art Reed of SCMS's Bradley Division recalls using an RCA RT21 reel-to-reel when he was an engineer at KDKA in the early 1990s. “The machine’s head placement was modified and the length of the tape loop used provided seven seconds of delay. The oxide would quickly be ground off the tape, so the loop was replaced during the top-of-the-hour news, when the delay was not in line.”
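The delay from a modified tape machine is simply the length of tape between the record and playback heads divided by the tape speed. The sketch below assumes the common broadcast speeds of 7.5 and 15 inches per second; the source does not say which speed the KDKA machine ran at.

```python
# Tape delay = length of tape traveling from record head to playback head / tape speed.
# Assumption: 7.5 or 15 inches per second (ips); the actual speed is not stated.

def tape_path_inches(delay_s: float, speed_ips: float) -> float:
    """Tape length (inches) that must pass between the heads for delay_s seconds."""
    return delay_s * speed_ips

for ips in (7.5, 15.0):
    inches = tape_path_inches(7.0, ips)
    print(f"{ips:>4} ips: {inches:.1f} in ({inches / 12:.2f} ft) between heads for 7 s")
```

Doubling the tape speed doubles the tape path needed for the same seven seconds.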

Delays were also created using endless loop tape cartridge machines; both ITC and Spotmaster had off-the-shelf products for the task.

Delay goes digital

Attempts at improving audio delays include work at Bell Labs in the 1950s using early analog-to-digital and digital-to-analog converters. The first viable digital delay (and the first commercial digital audio processor) was released in 1971. Developed by Francis Lee and Barry Blesser of MIT, the Delta T-101 was a breakthrough product from Lexicon, founded two years earlier. Among Blesser’s other accomplishments are the EMT-250, the first digital reverb, introduced in 1976, and co-founding 25-Seven Systems in 2003.

1975 saw Eventide introduce the BD955 profanity delay. This digital box used 16k RAM chips — 160 of them — to deliver a whopping 3.2 seconds of delay.
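The BD955 figures can be sanity-checked with a little arithmetic. This sketch assumes each “16k” chip holds 16,384 bits (a 16K × 1 DRAM organization); the source doesn’t state the box’s sample format, so the result is only the data rate the memory could sustain over 3.2 seconds, not a claim about how Eventide encoded audio.

```python
# Assumption: "16k RAM chip" means 16,384 bits per chip (16K x 1 organization).
BITS_PER_CHIP = 16 * 1024
CHIPS = 160
DELAY_S = 3.2

total_bits = BITS_PER_CHIP * CHIPS        # total delay memory, in bits
bits_per_second = total_bits / DELAY_S    # audio data rate that fills it in 3.2 s

print(f"{total_bits:,} bits of memory ({total_bits // 8 // 1024} KiB)")
print(f"about {bits_per_second:,.0f} bits of audio per second of delay")
```

That works out to roughly 2.6 million bits of memory, or about 820,000 bits for every second of audio held.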

The need to improve audio time management

Increased sophistication of both programming and listeners puts pressure on stations to better manage their clocks. “Live and local” happens, well, when it happens, and may not line up with the network feed. Stations may want to segue more smoothly between syndicated and local programming. And opportunities to run additional ads in an hour are tough to turn down.

These requirements created the demand for inaudible time compression/expansion, as well as seamless, inaudible transitions between time-processed and real-time audio. These features need to be managed without making operators crazier than they already are.

New transmission technologies present additional challenges. “HD Radio delay is around eight seconds, but there is no precise match among devices,” one highly placed New York City radio engineer reports. “For any number of reasons, things drift and just a few frames is enough to be detectable, primarily on voice.”
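The engineer doesn’t list the reasons for drift, but one common mechanism is that two devices’ clocks never run at exactly the same rate. A small sketch of how a clock mismatch, expressed in parts per million (ppm), accumulates into timing error; the ppm values are hypothetical and not measurements of any HD Radio equipment:

```python
def drift_ms(ppm_offset: float, elapsed_s: float) -> float:
    """Timing error (ms) accumulated between two devices whose clocks differ
    by ppm_offset parts per million, after elapsed_s seconds of running."""
    return ppm_offset * 1e-6 * elapsed_s * 1000.0

# Hypothetical clock mismatches, evaluated over one hour of continuous operation.
for ppm in (1, 10, 50):
    print(f"{ppm:>3} ppm -> {drift_ms(ppm, 3600):6.1f} ms per hour")
```

At a 10 ppm mismatch, two paths that start perfectly aligned are 36 ms apart after an hour, and the gap keeps growing unless something re-aligns them.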

HD Radio delay poses unique issues for stations that broadcast live sports and want to turn off their HD Radio diversity delay during games so fans at the venue can listen in real time. Getting in and out of this “ball-game” mode means switching the HD Radio signal on and off without annoying listeners or impacting ratings.

FM single frequency networks (SFN) synchronize multiple transmitters, with overlapping footprints, to achieve broad, unbroken coverage. “To optimize performance of SFN, the basic concept is to synchronize everything,” says Nautel’s Chuck Kelly. “In addition to RF carrier frequency and pilot frequency/phase, we’ve got to make sure the audio content amplitude and phase is synchronized.”
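One piece of “synchronize everything” is making each site’s audio arrive at the same moment even though the distribution paths to the transmitters have different latencies. A minimal sketch: pad every site up to the slowest path. The site names and latencies are hypothetical, and this ignores RF propagation differences in the overlap zones, which SFN designers may also account for.

```python
def alignment_delays(path_latency_ms: dict[str, float]) -> dict[str, float]:
    """Extra delay (ms) to add at each site so every transmitter's audio
    lines up with the site fed by the slowest distribution path."""
    slowest = max(path_latency_ms.values())
    return {site: slowest - latency for site, latency in path_latency_ms.items()}

# Hypothetical sites and measured studio-to-transmitter latencies (ms).
delays = alignment_delays({"main": 12.0, "booster_north": 35.5, "booster_south": 20.0})
print(delays)  # {'main': 23.5, 'booster_north': 0.0, 'booster_south': 15.5}
```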

How 25-Seven advanced audio delay technology works

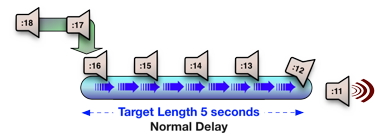

Here’s our audio delay line, shown as a “conveyor belt.” Individual moments of audio come in one end, work their way down the belt, and come out on the other end some time later. In this example, as the :16 mark is injected, second :11 is played out.
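In software, a conveyor belt like this is a first-in, first-out buffer, often implemented as a ring buffer. A minimal Python sketch, treating each whole second as one “moment” to mirror the figure; the `FixedDelay` class is hypothetical and is not how 25-Seven implements its delay line:

```python
from collections import deque

class FixedDelay:
    """A fixed delay line modeled as a conveyor belt (FIFO).

    Each call to process() pushes one new moment of audio in and returns the
    moment that entered delay_steps calls earlier (silence until the belt fills).
    """

    def __init__(self, delay_steps: int):
        # Pre-fill with silence so output lags input by exactly delay_steps.
        self.belt = deque([0.0] * delay_steps)

    def process(self, moment: float) -> float:
        self.belt.append(moment)      # new audio enters one end of the belt
        return self.belt.popleft()    # the oldest audio leaves the other end

line = FixedDelay(5)
out = [line.process(second) for second in range(1, 17)]
print(out[-1])  # 11: as second :16 goes in, second :11 comes out
```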

In real-world radio, stations need to enter and exit this delay, such as when a profanity delay dumps a caller’s inappropriate language or a station enters or exits ballpark mode. Without the advanced audio time compression-expansion algorithms found in 25-Seven products to process those transitions, entering delay will result in repeated audio, while exiting delay will cause audio to be lost.
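The arithmetic behind repeated and lost audio is simple: with delay d, the listener hears input time t − d at output time t. If d jumps instantly, the mapping jumps with it. A hypothetical helper, `played_seconds`, makes the effect visible:

```python
def played_seconds(start, end, switch_at, old_delay, new_delay):
    """For each output second in [start, end), return the input second heard
    when the delay jumps instantly from old_delay to new_delay at switch_at."""
    heard = []
    for t in range(start, end):
        delay = old_delay if t < switch_at else new_delay
        heard.append(t - delay)  # output at time t carries input from t - delay
    return heard

# Entering a 5 s delay abruptly at t = 10: input seconds 5-9 play twice.
print(played_seconds(5, 15, 10, 0, 5))  # [5, 6, 7, 8, 9, 5, 6, 7, 8, 9]
# Exiting a 5 s delay abruptly at t = 10: input seconds 5-9 are never heard.
print(played_seconds(5, 15, 10, 5, 0))  # [0, 1, 2, 3, 4, 10, 11, 12, 13, 14]
```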

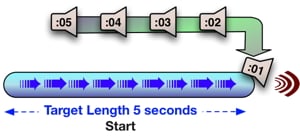

Let’s look at how 25-Seven achieves precise, high-resolution delay and smoothly ramps delay up and down. If you set a 5-second target delay, the full 5-second delay line is reserved. Audio is injected at the end of that line. Second :01 goes in and comes out almost immediately.

Then the injection point starts moving earlier, building the delay and gradually making it longer. To avoid gaps or interruptions, the system stretches each moment of audio a tiny bit as it leaves the delay line “conveyor belt.”

When the injection point reaches the target length, audio is fully delayed. There’s no more need for stretching. The delay then behaves like a fixed digital delay until you choose to exit. To ramp down, the injection point moves later in the delay line; audio is subtly sped up, and the delay shrinks until it reaches zero.
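The ramp can be modeled as a backlog that grows or drains. If audio plays out at `rate` times real speed, the delay changes by (1 − rate) seconds for every second of real time. This sketch assumes a constant rate; the 4% values in the usage example are hypothetical, not 25-Seven settings, and a real system varies the stretch from moment to moment.

```python
def ramp(start_s: float, target_s: float, rate: float, dt: float = 0.01) -> list[float]:
    """Return the delay (seconds) after each dt-second step of a ramp from start_s to target_s.

    rate is the playback speed relative to real time: rate < 1 stretches audio
    so the delay grows; rate > 1 shrinks it so the delay drains. During each
    step, dt seconds of audio enter the line while rate * dt seconds leave it.
    """
    if start_s == target_s:
        return [start_s]
    growing = target_s > start_s
    if rate == 1.0 or growing != (rate < 1.0):
        raise ValueError("use rate < 1 to build delay and rate > 1 to reduce it")
    delay, history = start_s, [start_s]
    while delay != target_s:
        delay += (1.0 - rate) * dt  # input minus output during this step
        # Clamp so the ramp stops exactly at the target instead of overshooting.
        delay = min(delay, target_s) if growing else max(delay, target_s)
        history.append(delay)
    return history

up = ramp(0.0, 5.0, rate=0.96)    # play audio 4% slow to build a 5 s delay
down = ramp(5.0, 0.0, rate=1.04)  # play audio 4% fast to drain it again
print(f"ramp up ~{(len(up) - 1) * 0.01:.0f} s, ramp down ~{(len(down) - 1) * 0.01:.0f} s")
```

At a constant 4%, the 5-second ramp takes about 125 seconds in each direction (5 / 0.04); a gentler stretch takes proportionally longer.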

Many audio professionals equate time compression and expansion with the pitch change that results from making sound waves faster and slower. When audio pitch is changed, especially on voices, it can turn off listeners who may then turn off your station.
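The pitch problem follows directly from the math. Playing audio r times faster multiplies every frequency by r, which shifts pitch by 12·log2(r) semitones. A short sketch:

```python
import math

def semitone_shift(speed_ratio: float) -> float:
    """Pitch change, in semitones, when audio is simply played speed_ratio times faster."""
    return 12.0 * math.log2(speed_ratio)

for pct in (1, 4, 10):
    print(f"{pct:>3}% faster -> {semitone_shift(1 + pct / 100):+.2f} semitones")
```

Even a 4% speed-up shifts a voice by roughly two-thirds of a semitone.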

Natural, transparent audio using stretch and shrink

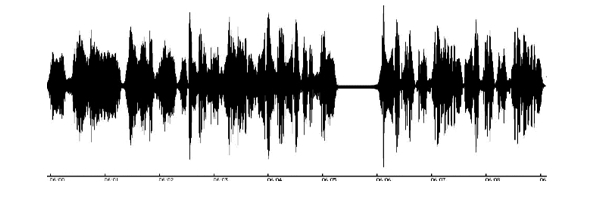

Imagine a sound, in this case an announcer reading copy. Each mark below the waveform represents one second.

An approach used by some systems is to look for pauses — places where the volume falls below a preset threshold — and cut those pauses out. That’s also problematic. It can, as you can see in the following waveform, completely destroy the pacing and delivery that your air talent works so hard to present. Since music rarely has pauses, it is impossible to effectively speed up music this way.
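A minimal sketch of the pause-cutting approach described above, for illustration only; `threshold` and `min_pause` are hypothetical parameters, and real systems that use this approach differ in the details:

```python
def remove_pauses(samples, threshold, min_pause):
    """Naive time compression: drop every run of at least min_pause consecutive
    samples whose absolute level is below threshold. Everything else is kept."""
    out, quiet = [], []
    for s in samples:
        if abs(s) < threshold:
            quiet.append(s)          # hold quiet samples until the run length is known
        else:
            if len(quiet) < min_pause:
                out.extend(quiet)    # short dip (e.g. inside a word): keep it
            quiet = []               # a long pause is discarded entirely
            out.append(s)
    if len(quiet) < min_pause:
        out.extend(quiet)            # trailing short dip is kept too
    return out

print(remove_pauses([0.5, 0.5, 0.0, 0.0, 0.0, 0.0, 0.5, 0.01, 0.5], 0.05, 3))
# [0.5, 0.5, 0.5, 0.01, 0.5]
```

Run on a music-like signal that never dips below the threshold, the function returns its input unchanged, which is exactly the limitation noted above.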

That’s why 25-Seven takes an original approach.

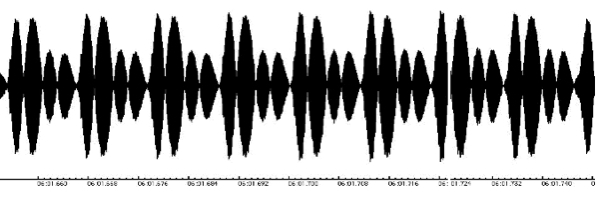

25-Seven squeezes time imperceptibly by working on a much tinier scale—not syllables, words, and pauses, but individual waves. Let’s zoom in on that sound, with each mark representing a millisecond.

Vowels like the one shown above, and sustained musical notes, consist of repeating patterns of waves. 25-Seven’s algorithms recognize these patterns, and — using artificial intelligence — delete just enough repetitions to make the sound faster without making it sound different. Think of the process as making tiny, very precise splices in long, continuous sounds. Each individual wave still takes the same amount of time, so the pitch doesn’t change, but there are slightly fewer waves overall.
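The idea of deleting whole repetitions can be sketched in two steps: estimate the repetition period with normalized autocorrelation, then splice out exactly one period. This is a generic textbook approach applied to a steady synthetic tone, not 25-Seven’s algorithm (which the source describes as AI-driven without further detail); `estimate_period` and `drop_one_period` are hypothetical helpers.

```python
import math

def estimate_period(x, min_lag, max_lag):
    """Return the lag (in samples) in [min_lag, max_lag] with the highest
    normalized autocorrelation, i.e. the most likely repetition period."""
    best_lag, best_score = min_lag, -math.inf
    for lag in range(min_lag, max_lag + 1):
        a, b = x[:-lag], x[lag:]
        num = sum(p * q for p, q in zip(a, b))
        den = math.sqrt(sum(p * p for p in a) * sum(q * q for q in b)) or 1.0
        if num / den > best_score:
            best_lag, best_score = lag, num / den
    return best_lag

def drop_one_period(x, start, period):
    """Splice out exactly one period starting at start. Whole cycles are removed,
    so each remaining wave keeps its length and the pitch is unchanged."""
    return x[:start] + x[start + period:]

# A steady 200 Hz tone sampled at 8 kHz repeats every 40 samples.
sample_rate, freq = 8000, 200
x = [math.sin(2 * math.pi * freq * n / sample_rate) for n in range(800)]
period = estimate_period(x, 20, 60)
y = drop_one_period(x, 100, period)
print(period, len(x), len(y))  # 40 800 760
```

The search range stops short of twice the expected period so a multiple of the true period can’t win; real speech needs far more care in choosing splice points as the pitch changes.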

Some sounds, including most consonants and almost all percussion instruments, don’t have nicely repeating waves. The algorithms recognize these sounds, and apply psychoacoustic principles to make cuts only where the ear isn’t likely to hear a change. A few kinds of sound, such as the short consonant “T” or the first part of a cymbal crash, don’t get cut at all.
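One simple way to find sounds that shouldn’t be cut is an energy-jump onset detector: split the audio into short blocks and flag any block much louder than the one before it. The source says 25-Seven applies psychoacoustic principles here without giving details, so this is only a stand-in; `block` and `ratio` are hypothetical tuning values.

```python
def protected_blocks(x, block=64, ratio=4.0):
    """Flag blocks whose energy jumps by more than ratio over the previous block.
    Flagged blocks (sharp attacks such as a 'T' or a cymbal hit) are left uncut."""
    flags, prev = [], None
    for i in range(0, len(x) - block + 1, block):
        energy = sum(s * s for s in x[i:i + block]) + 1e-12  # avoid dividing by zero
        flags.append(prev is not None and energy / prev > ratio)
        prev = energy
    return flags

# Quiet lead-in followed by a sudden loud attack.
x = [0.001] * 256 + [0.8] * 256
flags = protected_blocks(x)
print([i for i, f in enumerate(flags) if f])  # [4]
```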

Technically speaking, we use variable-width analysis windows to simultaneously check the pitch, tempo, and spectral characteristics of the audio. Additional algorithms are applied to preserve musical rhythms and stereo placement.

The intelligent analysis and manipulation occurs thousands of times per second. Pauses are analyzed and processed the same way, so everything is kept in proportion and details of breath and pacing are never lost. The result is smooth, natural sounding time compression that is virtually undetectable to listeners.
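Keeping everything in proportion means pauses shrink by the same factor as words. A small arithmetic sketch with hypothetical segment lengths; it works on whole segments for clarity, while the process described above operates on far smaller pieces:

```python
def compress_segments(durations_s, target_total_s):
    """Scale every segment (words and pauses alike) by the same factor so the
    total fits target_total_s; relative pacing between segments is preserved."""
    factor = target_total_s / sum(durations_s)
    return factor, [d * factor for d in durations_s]

# Hypothetical read: word, pause, word, breath, word (seconds).
factor, new = compress_segments([1.2, 0.4, 0.9, 0.3, 1.2], 3.8)
print(f"factor {factor:.2f}:", [round(d, 3) for d in new])
```

The same proportion explains the scheduling payoff: compressing an hour of programming by just 2% frees 3,600 × 0.02 = 72 seconds.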

Graphics and some of the text were appropriated from Jay Rose's awesome user manuals for 25-Seven products. This post first appeared in the July/August 2013 edition of Radio Guide.
