Listeners Love Streaming Audio - So Give Them The Best-Sounding Streams Possible

By Jim Kuzman on Jan 29, 2026 2:03:58 PM

Here's practical guidance for broadcast engineers on improving streaming audio quality, including processing, encoding choices, bitrate management, and loudness control.

(Originally published in Radio World's "Streaming Best Practices" eBook, November 2025)

Jim Kuzman is a lifelong broadcaster and has been with Telos Alliance since 2011. He serves as the company’s Director of Content, but occasionally sneaks off to lend his ears and audio processing expertise to the Omnia team.

Radio World: What’s the most important trend in how audio streaming and workflows have evolved for radio?

Jim Kuzman: Streaming listenership is on the rise, and savvy broadcasters are sitting up and taking notice of how and where their audience is “tuning in.” Studio technologies like AoIP (Audio over IP) are ideal for IP-based deliverables like streaming audio. Radio companies that have already embraced it are perfectly poised to deliver high-quality streams, while those who have yet to make the leap now have the perfect reason to get on board. In terms of workflows, broadcasters have never had so many options, ranging from dedicated proprietary hardware to software hosted on bare metal servers to scalable and flexible cloud-based platforms. Whether you’re a single station serving a small community or a large group in a major market, there are technologies and workflows that match your needs.

RW: What role do Telos products play in this ecosystem?

Kuzman: Our streaming products fall into two basic categories: Audio processing and stream encoding, with a fair bit of overlap between the two within our lineup.

For instance, Omnia® Forza HDS is available as a standalone on-premises or cloud-hosted software processor, but it is also a processing option in our Z/IPStream X/20 (software) and Z/IPStream R/20 (hardware) processing and streaming platforms. Z/IPStream also offers an Omnia.9 processing option, while the Omnia.9 itself includes built-in stream encoding for each of its sources. We believe giving our clients processing options for their streams is just as important as for their terrestrial broadcasts.

RW: What techniques or best practices can you share for maintaining audio quality?

Kuzman: Much has changed in the audio world over the past several decades, but one truth remains: Paying attention to the details at every step of the process and every stage of the audio path pays off, whether the content is destined for an analog over-the-air signal, an HD or DAB channel, a live stream, or on-demand listening.

Because streaming audio is often delivered via a lossy codec and podcasts are typically saved in a data-compressed format, maintaining linear or lossless audio for as long as possible reduces the effects of cascading data compression, particularly at lower bitrates. For lower bitrate streams, where the effects of data compression are more audible, adjusting your processing to help mask them - or at the very least, not exaggerate them - can make for a more pleasant-sounding stream with less listener fatigue. Tailoring the EQ and carefully adjusting the middle and upper bands of a processor’s multiband AGCs and limiters is key. Omnia Forza also features our SENSUS® algorithm, which intelligently preconditions audio destined for HD and streaming paths.

Approaching and understanding loudness in the right context is important, too. We all know the benefits of and reasons for building loudness to a certain level for analog AM and FM signals, but as everyone also knows, there are tradeoffs. With streaming audio and podcasts, you are trying to manage and control loudness to meet a certain LUFS target – not build it. This is a huge gift, as it allows you to relax the processing and let the music breathe. You still want consistent levels and a uniform spectral balance, but you’re no longer on the hook to beat up the music purely in the name of competitive loudness. Make the most of that opportunity; your listeners will notice.
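As a rough illustration of what "managing loudness to a target" means in numbers, the sketch below computes the static gain that would bring a few items to a common LUFS target. The -16 LUFS target, the item names, and the measured values are assumptions for illustration only; none of them come from the interview. Measuring integrated loudness itself (per ITU-R BS.1770) is outside the sketch, and a real-time streaming processor does considerably more than apply a fixed gain.

```python
def gain_to_target_db(measured_lufs, target_lufs):
    """Static gain in dB that moves a measured integrated loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db):
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


# Assumed target; use whatever your platform or distributor specifies.
TARGET_LUFS = -16.0

# Hypothetical integrated-loudness measurements, for illustration only.
measured = {"song_a": -9.5, "spot_b": -7.0, "interview_c": -22.0}

for name, lufs in measured.items():
    gain_db = gain_to_target_db(lufs, TARGET_LUFS)
    print(f"{name}: {gain_db:+.1f} dB (x{db_to_linear(gain_db):.2f})")
```

Note that the loud items are turned down rather than the quiet ones being pushed up to match them, which is the opposite of the competitive-loudness mindset described above.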

RW: What considerations come into play for HD Radio multicasts?

Kuzman: Unlike analog FM, HD Radio isn’t frequency-limited to 15 kHz, doesn’t employ pre-emphasis, and doesn’t rely on hard clipping for peak control. Not having those things working against you helps in terms of audio quality, but HD has its own challenges. Even though HD Radio uses a lossy codec, if the primary channel is fed linear audio through an uncompressed path and is allotted all of the available bits, there are minimal audible artifacts. When you start adding HD sub-channels, you’re slicing the metaphorical pie into smaller and smaller pieces. All of the channels must be run at lower bitrates to make room, including the primary HD path. This is analogous to running streaming audio at reduced bitrates, so the same recommendations for adjusting your processing to help mitigate the audible effects apply here. We’re always available to help people balance the tradeoffs.

RW: What should streamers know about encoding formats?

Kuzman: The two primary considerations are compatibility and the tradeoff between audio quality and bitrate.

For lossy formats, MP3 has a slight edge for compatibility, as all popular modern web browsers, including Chrome, Safari, Edge, Firefox, and Opera, natively support it on desktop or mobile. AAC runs a very close second, though Firefox introduces a caveat or two depending on the operating system. Ogg Vorbis can deliver very high quality at high bitrates and is recognized by most web browsers, but isn’t as efficient as AAC. Lossless formats such as FLAC, WAV, AIFF, and ALAC are available, but they do not enjoy the same level of near-universal compatibility, and by their very nature are not nearly as efficient as their lossy counterparts.

Like so many things in the audio world, compromises and tradeoffs are lurking around every corner. The most significant one for streaming is accepting lower audio quality for the sake of using less bandwidth, or, conversely, using more data and a bigger pipeline in exchange for better fidelity. AAC, which includes several variants optimized for low-bit-rate streams, comes out ahead in terms of efficiency, as it requires roughly half the bandwidth of MP3 to deliver the same perceived audio quality. That gives you the choice of using AAC either to improve your sound at the same bitrate or to match the sound of an MP3 stream at roughly half the bitrate.
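To put the bandwidth tradeoff in concrete terms, here is a small back-of-the-envelope calculation. It uses the "roughly half" rule of thumb from the text as a fixed factor, which is a simplification; the function names and the example bitrates are illustrative assumptions, and container and transport overhead are ignored.

```python
# "Roughly half the bandwidth of MP3", treated here as an exact factor.
AAC_TO_MP3_RATIO = 0.5


def megabytes_per_hour(bitrate_kbps):
    """Audio payload for one hour of listening, in megabytes (10**6 bytes)."""
    bits = bitrate_kbps * 1000 * 3600
    return bits / 8 / 1_000_000


def equivalent_aac_kbps(mp3_kbps):
    """AAC bitrate assumed to sound comparable to the given MP3 bitrate."""
    return mp3_kbps * AAC_TO_MP3_RATIO


mp3_kbps = 128  # example bitrate, chosen for illustration
aac_kbps = equivalent_aac_kbps(mp3_kbps)
print(f"MP3 at {mp3_kbps} kbps: {megabytes_per_hour(mp3_kbps):.1f} MB per hour")
print(f"AAC at {aac_kbps:.0f} kbps: {megabytes_per_hour(aac_kbps):.1f} MB per hour")
```

Under these assumptions, a listener on mobile data uses about 57.6 MB per hour on the MP3 stream and about 28.8 MB per hour on the comparable AAC stream.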

Streamers should also consider how listeners consume their content. A 320 kbps AAC stream might be appropriate for critical at-home music listening on higher-end speakers or headphones, and be very much appreciated by a discerning audience, but it is overkill for delivering a podcast through a smartphone speaker using mobile data. Many streamers address this by providing listeners with both a lower bitrate and a higher quality option.

RW: How can a station that is streaming match levels among different sources?

Kuzman: Mismatched audio levels - specifically commercials that are louder than the main program content - are among the top complaints from listeners. The problem applies to television, streaming video services, on-demand content, and most definitely streaming audio. No one enjoys having to turn up the volume to understand what someone is saying and then scrambling two minutes later to turn down a blaring commercial! The same is true when transitioning from one song to another. The audience expects to set their volume once for a given session and be done, and rightfully so. There are two primary ways to ensure consistent levels across sources: Real-time processing and file-based processing.

Using a dedicated real-time processor is not dissimilar to what radio stations have been doing for decades to smooth out differences in loudness and, in most cases, provide spectral consistency. The most important consideration with the real-time approach is to use a processor specifically designed for streaming audio, ideally one where you can set an output loudness target for a specific LUFS. If you are tempted to feed your streaming encoders with the output of your FM processor to save time or money, don’t. It’s the fastest path to an awful-sounding stream that will drive listeners to your competition.

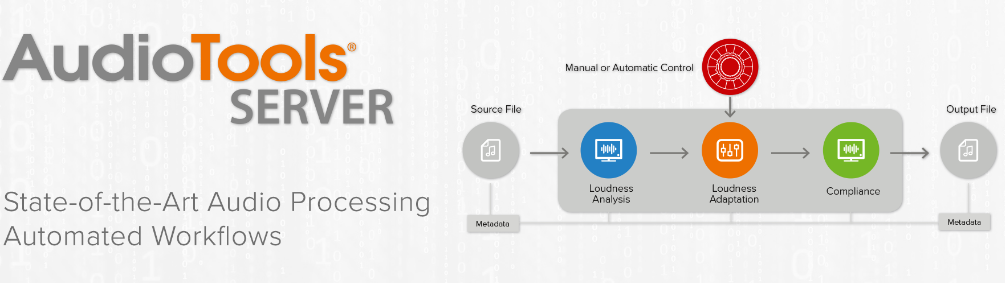

File-based processors such as our Minnetonka AudioTools® Server allow you to achieve uniform loudness and create your signature sound across your entire library, and are worthy of serious consideration for streamers. They provide automatic, faster-than-real-time batch processing using watch folders and specific pre-determined workflows, which can be a real time-saver and deliver very consistent results.
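For readers unfamiliar with the watch-folder pattern, the following is a generic sketch of the idea in Python. It is not the AudioTools Server API or workflow; every name here (`scan_watch_folder`, `placeholder_process`, the folder names, the list of suffixes) is hypothetical, and the "processing" step simply copies the file where a real system would apply loudness and tonal processing.

```python
import shutil
import tempfile
from pathlib import Path

# Assumed set of input formats, for illustration only.
AUDIO_SUFFIXES = {".wav", ".flac", ".aiff"}


def placeholder_process(src, dst):
    """Stand-in for real loudness processing: copies the file unchanged."""
    shutil.copyfile(src, dst)


def scan_watch_folder(watch_dir, out_dir, seen, process):
    """Process audio files in watch_dir not handled yet; return outputs written."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for src in sorted(watch_dir.iterdir()):
        if src in seen or src.suffix.lower() not in AUDIO_SUFFIXES:
            continue  # already handled, or not an audio file
        dst = out_dir / src.name
        process(src, dst)
        seen.add(src)
        written.append(dst)
    return written


# Small demonstration in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    watch, out = Path(tmp) / "incoming", Path(tmp) / "processed"
    watch.mkdir()
    (watch / "promo.wav").write_bytes(b"fake audio")
    (watch / "readme.txt").write_text("ignored")
    handled = set()
    print([p.name for p in scan_watch_folder(watch, out, handled, placeholder_process)])
    print(scan_watch_folder(watch, out, handled, placeholder_process))  # nothing new
```

A production version would run the scan on a timer or filesystem event, and would confirm that a file has finished copying before processing it; both are omitted here to keep the sketch short.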

RW: What else should we know?

Kuzman: If I had to pick one takeaway from all of this, it would be that taking the time to do streaming right and treating it with the same care as your terrestrial signal is a must if you intend to build and keep an audience. Streaming audio is on the rise. Your listeners are already there, and they deserve the best-sounding stream you can give them.

