What the Heck, Where's the Tech? | Telos Alliance

By The Telos Alliance Team on May 10, 2017 3:32:16 PM

ATSC 3.0 Next Generation Audio (NGA) System Demystified

Technology truly is a train that just keeps on rolling. With the world preparing for yet another advancement in personalized entertainment experience, acronyms are being thrown around like dice on the craps table. What does it all mean and how will any of it benefit the consumer?

Let’s take a deep dive into what a Next Generation Audio System can really do by looking at what’s happening in South Korea.

Broadcasters in South Korea are deploying a new broadcast system in time for the upcoming Winter Games, which will take place in PyeongChang next February. This new broadcast system supports UHD video along with a next-generation audio format, MPEG-H, which can provide viewers with a personalized experience along with immersive (3D) audio.

The tools required to author, validate, monitor, and create the advanced audio programs supported by the new broadcast format largely do not exist yet, leading to the question: What the Heck, Where’s the Tech? Here, we go deep into demystifying the ATSC 3.0 Next-Generation Audio (NGA) System.

MPEG-H – The Acronym That Delivers!

The features offered by MPEG-H are a departure from existing formats, which require complete mixes to be delivered in fixed channel formats to the end viewer. With MPEG-H, the audio can be delivered as sub-mixes or individual elements. The next generation Set Top Box (STB), TV, or AV receiver creates the final mix for the viewer based upon options provided by the program producer and the viewer’s own preferences.

Audio for this system can be made up of the traditional channel-based formats in fixed locations that we are all used to (Front Left, Front Right, Center, LFE, Left Surround, Right Surround, etc.), but the system now offers the option of adding up to four overhead channels as well. In addition to the new overhead channels, object-based audio is also supported, and the individual objects may be located anywhere within the 3D space. Programs broadcast in MPEG-H can be created using either of these approaches or a blend of both.

One key aspect of all of this is that new and additional forms of metadata are required to support the features available in MPEG-H and to enable the “receiver mix” function.
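
To make the “receiver mix” idea concrete, here is a minimal Python sketch. It is not an MPEG-H implementation. It assumes mono elements held as plain lists of samples, and the names `Element`, `receiver_mix`, and `default_gain_db` are hypothetical, chosen only for illustration. Each element gets the producer's default gain plus an optional viewer offset, and the results are summed. A real receiver also renders channels and objects to the listener's speaker layout, which is left out here.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Element:
    """One delivered sub-mix or object (hypothetical structure)."""
    name: str
    samples: List[float]          # mono samples, for illustration only
    default_gain_db: float = 0.0  # gain suggested by the program producer


def db_to_linear(db: float) -> float:
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def receiver_mix(elements: List[Element],
                 viewer_gain_db: Dict[str, float]) -> List[float]:
    """Sum all elements, applying producer default plus viewer offset."""
    length = max(len(e.samples) for e in elements)
    out = [0.0] * length
    for e in elements:
        offset = viewer_gain_db.get(e.name, 0.0)
        gain = db_to_linear(e.default_gain_db + offset)
        for i, sample in enumerate(e.samples):
            out[i] += gain * sample
    return out


# Two elements arrive separately; the final mix is made in the receiver.
elements = [
    Element("dialog", [1.0, 0.5]),
    Element("ambience", [0.2, 0.2]),
]
default_mix = receiver_mix(elements, {})
dialog_boosted = receiver_mix(elements, {"dialog": 6.0})
```

The point of the sketch is only that the broadcaster sends the parts plus metadata, and the sum happens at the viewer's end.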

Wait For It ... The Solution Is Here!

New systems for authoring and verifying the metadata are necessary. While some of the tools needed to author metadata in the file-based domain during post-production exist, real-time devices for monitoring or verifying the audio content and metadata in various parts of the broadcast chain are also required. Similarly, devices that author the metadata in real time during live broadcasts are also necessary.

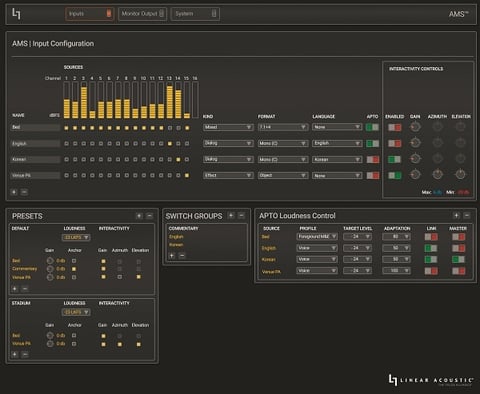

The Linear Acoustic AMS Authoring and Monitoring System, recipient of a “Best of Show” award at NAB 2017, is a comprehensive solution for the real-time authoring, rendering, and monitoring of advanced MPEG-H audio programs. The AMS simultaneously delivers advanced audio with metadata supporting the features of MPEG-H along with discrete 5.1-ch and 2-ch rendered audio outputs for legacy ATSC 1.0 broadcasts or mobile/OTT services. A separate monitoring output lets the user monitor authored content with its metadata, or the rendered outputs, in a variety of listening configurations from mono up through 7.1+4.

The AMS provides the authoring engineer with a simple method to build presets of different listening experiences. This ultimately enables viewers to quickly choose and personalize their sound experience for optimal playback in their environments, from mobile devices up through immersive home theatres.

For example, the different listening experiences could offer various audio perspectives for a sporting event, such as being seated higher in the stands or closer to the field of play. They could also let viewers choose whether to include the overhead ambience channels in their final mix or keep the audio to the 5.1 channels at ear level that we are used to today.

The authoring engineer is also able to create Switch Groups which allow the viewer to easily select which language or team announcer they wish to hear. This feature ensures that all viewers are able to hear the audio in as many channels as are transmitted regardless of which language or team announcer they are listening to, which is not always the case today.
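
A Switch Group can be thought of as a set of mutually exclusive elements with one default. The Python sketch below illustrates that idea only. The `SwitchGroup` class, its fields, and the announcer names are hypothetical and do not reflect the actual MPEG-H metadata syntax or the AMS interface.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class SwitchGroup:
    """Mutually exclusive elements; exactly one plays at a time."""
    name: str
    members: Tuple[str, ...]
    default: str

    def __post_init__(self) -> None:
        # The authored default must itself be one of the choices.
        if self.default not in self.members:
            raise ValueError("default must be one of the members")

    def resolve(self, viewer_choice: Optional[str] = None) -> str:
        """Return the viewer's choice if it is offered, else the default."""
        if viewer_choice in self.members:
            return viewer_choice
        return self.default


# Hypothetical group for a sports broadcast.
commentary = SwitchGroup(
    name="commentary",
    members=("home announcer", "away announcer", "spanish"),
    default="home announcer",
)
selected = commentary.resolve("away announcer")
fallback = commentary.resolve("french")  # not offered, so the default is used
```

Because only the selected member changes, the rest of the mix stays at the full transmitted channel count whichever announcer is chosen.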

MPEG-H also offers some interactivity controls to the viewer, over which the authoring engineer has full control. The engineer can enable each control and set the range of adjustment available to the viewer.

One of these controls is the ability to adjust the gain of individual elements or groups. Allowing a hearing-impaired viewer to adjust the gain of the dialog relative to the background audio can provide them with a much better experience, without consuming additional bandwidth in the transport stream to create a separate hearing-impaired service.

Interactivity controls for the position of audio objects are also available. The authoring engineer can establish a range of vertical (height) and horizontal (azimuth) positions within which the viewer is allowed to move objects. Perhaps one viewer would like to position the audio from the basketball venue’s PA system directly overhead for a more immersive experience, while another would like to keep the venue PA audio in the center channel. The authoring engineer can give both viewers the experience they prefer simply by providing controls that they can adjust within the limits established by the authoring engineer and program producer.
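
The interplay between authored limits and viewer requests amounts to clamping each request to an allowed range. Here is a minimal Python sketch under that assumption. The `Range` and `InteractivityLimits` classes, the `apply_viewer_request` function, and all numeric limits are hypothetical illustrations, not values or structures taken from MPEG-H or AMS.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Range:
    """Closed interval set by the authoring engineer."""
    low: float
    high: float

    def clamp(self, value: float) -> float:
        """Pull a requested value back inside the authored limits."""
        return max(self.low, min(self.high, value))


@dataclass(frozen=True)
class InteractivityLimits:
    gain_db: Range        # allowed gain offset for the element
    azimuth_deg: Range    # allowed horizontal position
    elevation_deg: Range  # allowed vertical (height) position


def apply_viewer_request(limits: InteractivityLimits,
                         gain_db: float,
                         azimuth_deg: float,
                         elevation_deg: float) -> Tuple[float, float, float]:
    """Return the (gain, azimuth, elevation) actually applied."""
    return (
        limits.gain_db.clamp(gain_db),
        limits.azimuth_deg.clamp(azimuth_deg),
        limits.elevation_deg.clamp(elevation_deg),
    )


# Hypothetical limits for the venue PA object.
venue_pa = InteractivityLimits(
    gain_db=Range(-6.0, 9.0),
    azimuth_deg=Range(-30.0, 30.0),
    elevation_deg=Range(0.0, 90.0),
)

# One viewer asks for more than the engineer allows; the request is limited.
applied = apply_viewer_request(venue_pa, gain_db=12.0,
                               azimuth_deg=0.0, elevation_deg=120.0)
```

With these made-up limits, a viewer can place the PA object directly overhead (90 degrees of elevation) or leave it at ear level in front, but cannot push the gain past the authored ceiling.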

The AMS interface gives the operator a quick and easy way to configure these MPEG-H controls, along with additional features. Using a joystick controller, the operator can create dynamic metadata for objects, panning them around in the 3D space.

Beyond its MPEG-H features, AMS provides additional functionality that audio engineers will find useful.

AMS is equipped with Linear Acoustic APTO™, a state-of-the-art loudness adaptation technology designed to carefully control audio levels in a way that preserves the transients, sonic image and artistic intent of the source, while ensuring loudness consistency and compliance for any desired target. Each input audio group has its own APTO processing engine with individual controls, allowing the operator to deliver audio which is compliant with their local regulations while still sounding very natural and unprocessed. Processing the elements of a mix independently provides for a much more natural sounding final mix than using one “sledgehammer” trying to keep everything in range.

Multiple loudness meters are provided, allowing the operator to ensure that the various presets and outputs are all at the desired or mandated levels. Additionally, loudness data may be logged to a file with user-selectable parameters for log intervals and contents. A variety of loudness parameters for each preset program are displayed on the web interface to allow easy and compliant mixing operations.

From a product design standpoint, AMS is as technologically advanced as the audio features it supports. In any broadcast environment, engineers must be able to deal with a multitude of I/O configurations. The Telos Alliance xNode family of AoIP devices allows Linear Acoustic AMS to be configured with optional analog, AES/EBU, GPIO logic, and SDI I/O, all with full Livewire+ AES67 Audio over IP support.

Telos Alliance SDI xNodes can de-embed 3G/HD/SD-SDI inputs, extracting up to 16 channels of audio to the Livewire+ AES67 port. The audio can be re-embedded into the SDI output stream with full video delay compensation for each SDI input, ensuring that audio video synchronization is maintained. Support for UHD video is achieved by using two 3G SDI xNodes in a quad-link configuration.

All Telos Alliance xNodes are fanless, so there are no complaints when installing them in an audio control room. This allows the AMS processing engine to be installed in a machine room alongside other devices which use active cooling with a simple managed network connection linking the units together.

Linear Acoustic AMS can be used in production environments to actively author and monitor metadata, or in other parts of the broadcast chain to monitor and validate audio streams containing a control track that were authored upstream. In both scenarios, AMS is capable of outputting 15 channels of discrete audio with a metadata control track, a 5.1-channel rendered output, a 2-channel rendered output, and a dedicated monitoring output, all simultaneously.

Full monitoring controls are available to the operator to check individual source elements, or to emulate the consumer listening experience for any of the programs and presets without affecting the other three outputs. The operator can choose to listen to speaker configurations from mono up through 7.1+4 at the monitoring output and, at the press of a button, change the output configuration to emulate what the viewer at home will experience.

Using the web interface, up to 36 outputs may be configured, including 16 channels of authored audio plus control channels, two additional rendered outputs, and up to 12 channels for monitoring. Outputs may be routed to an SDI xNode for embedding or to any AES67 compatible device.
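
As a quick sanity check, the channel counts quoted above can be added up in a few lines of Python. The split of the “two additional rendered outputs” into 6 channels (5.1) and 2 channels is an assumption based on the rendered outputs named elsewhere in this article, and the dictionary keys and function names are illustrative only.

```python
# Channel counts per output group, as read from the article.
OUTPUT_CHANNELS = {
    "authored audio plus control": 16,
    "5.1 rendered output": 6,    # assumed: 5 full-range channels + 1 LFE
    "2-ch rendered output": 2,   # assumed: stereo
    "monitoring": 12,
}


def total_channels(groups: dict) -> int:
    """Add up the channels across all output groups."""
    return sum(groups.values())


def layout_channels(main: int, lfe: int, height: int) -> int:
    """Channel count of a layout written as main.lfe+height."""
    return main + lfe + height


total = total_channels(OUTPUT_CHANNELS)
largest_monitor_layout = layout_channels(main=7, lfe=1, height=4)

# The pieces should account for the 36 configurable outputs, and the
# largest monitoring layout (7.1+4) should fit the 12 monitoring channels.
assert total == 36
assert largest_monitor_layout == OUTPUT_CHANNELS["monitoring"]
```

Under that reading, the 36 outputs are fully accounted for: 16 + 6 + 2 + 12.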

The basic AMS™ system configuration consists of the following:

One main processing unit which implements the following:

- MPEG-H authoring, rendering and monitoring functions

- 16 channel audio input via Livewire+ AES67 over RJ-45 Ethernet

- 36 channel audio output via Livewire+ AES67 including authored audio plus control channel, 5.1- and 2-ch rendered outputs, and monitor output

- Web hosting of the user interface

2 x Telos Alliance 3G SDI xNode interfaces, each of which supports:

- Two relay-bypassed 3G/HD/SD-SDI paths with access to all audio channels

- Compensating video delays

Optional Controller support includes:

- Industry standard Windows and Mac OS web browsers

- Touch screen devices

- Mobile devices

- Hardware control panels

- 3rd party Joystick controllers for object positioning

If you’ve made it this far, we will leave you with this list of funny acronyms … thanks for your interest!

Further Reading

To learn more about the Linear Acoustic Authoring & Monitoring System and ATSC 3.0, check out these pages:

Linear Acoustic AMS Authoring & Monitoring System product page

Telos Alliance has led the audio industry’s innovation in Broadcast Audio, Digital Mixing & Mastering, Audio Processors & Compression, Broadcast Mixing Consoles, Audio Interfaces, AoIP & VoIP for over three decades. The Telos Alliance family of products includes Telos® Systems, Omnia® Audio, Axia® Audio, Linear Acoustic®, 25-Seven® Systems, Minnetonka™ Audio and Jünger Audio. Its products cover the full range of audio applications for radio and television, from Telos Infinity IP Intercom Systems, the Jünger Audio AIXpressor Audio Processor, Omnia 11 Radio Processors, and Axia Networked Quasar Broadcast Mixing Consoles to Linear Acoustic AMS Audio Quality Loudness Monitoring and the 25-Seven TVC-15 Watermark Analyzer & Monitor. Telos Alliance offers audio solutions for any and every radio, television, live events, podcast, and live streaming studio. With Telos Alliance, “Broadcast Without Limits.”

Topics: Television Audio, Linear Acoustic AMS, Next Generation Audio
